The Future of 3D Generative AI with Meshy’s CEO Ethan Hu

This interview features Ethan Hu, the CEO of Meshy AI. In 2024 alone, over 20 million 3D assets were generated with Meshy. In this conversation, we dive into the world of 3D generative AI.

You will discover:

- How professionals are using AI-generated 3D assets

- How the industry is reacting to the rapid advancements

- A broader look at 3D content for various use cases, from visualization to full games generated with AI

- An ambitious prediction about the future of 3D generative AI

Before getting into the interview, I want to quickly introduce today’s sponsor, Gracia AI. Gracia AI is the only app that allows you to experience Gaussian Splatting volumetric videos on a standalone headset, either in VR or MR.

It is a truly impressive experience, and I recommend trying it out right now on your Quest or PC-powered headset.

Interview with Ethan Hu

What are the most common misconceptions about 3D generative AI?

Ethan Hu: One of the biggest misconceptions is that AI-generated 3D models are messy and unusable because of poor UVs and topology. People often comment on our YouTube and Instagram posts, saying, “Look at this garbage!” But the reality is, most users don’t use AI-generated models as-is. They modify them in tools like ZBrush or use them for conceptualization. At Meshy, we also support quad meshes and offer a retopology feature that can reduce a model from a million faces to around 5,000, making it easier to use for animation or subdivision. So, while AI-generated models aren’t perfect yet, they’re already incredibly useful in many workflows, especially in game production, film, AR, and VR.

Yet we are still “not there”. What are the current limitations of 3D generative AI?

Ethan Hu: Absolutely, there are limitations. AI isn’t replacing 3D artists anytime soon. Think of it like Photoshop. It didn’t replace painters; it just gave them a better tool. Similarly, AI tools like Meshy are here to enhance productivity, not replace creativity. A year ago, people said AI-generated models weren’t worth modifying, but today, you can import them into ZBrush or Blender and make meaningful changes. The key is that AI is a tool, not a replacement for human creativity. It helps artists focus on design rather than tedious tasks like basic sculpting.

Can you give us some examples of how 3D artists are using AI-generated assets in their workflows?

Ethan Hu: Sure! Many artists use Meshy to generate rough meshes, which they then import into ZBrush to add details. This is much faster than starting from scratch. Others use Meshy for quick prototyping, especially concept artists who want a 3D view of their 2D designs. They pass these 3D models along with their 2D concepts to downstream artists, giving them a better reference. Level artists also use Meshy to generate assets and arrange them in scenes, speeding up the level design process. Instead of searching for models on Sketchfab or the Unity Asset Store, they can create exactly what they need in minutes.

Are people using text-to-3D or image-to-3D more often?

Ethan Hu: It’s about half and half, but the users are very different. Game studios tend to use image-to-3D because they need assets that match their concept art. On the other hand, people in VR, XR, or 3D printing often use text-to-3D because it’s more creative and doesn’t require an image. So, it really depends on the use case.

Are people mostly creating characters, or are they also generating props, assets, and environments?

Ethan Hu: It depends on the use case. For 3D printing, characters are popular. But in game development, especially for AAA studios, AI-generated characters are less common because they’re often hero assets that require high quality. Instead, studios use AI for props like chairs, tables, or rocks, which are needed in large quantities but don’t require the same level of detail. So, it really varies based on the project.

You’ve been working on Meshy for over a year now. What have you learned during this time?

Ethan Hu: We’ve learned a lot! When we released Meshy One, people called it garbage. But with each version, we’ve made significant improvements. Meshy Two brought better textures, Meshy Three improved mesh quality, and Meshy Four combined both. Now, with Meshy Five, we’re solving hard surface modeling, which was a big challenge. The feedback from AAA studios has been positive: they see the potential and are starting to use our models in their games. It’s been a journey of staying optimistic, listening to users, and continuously improving.

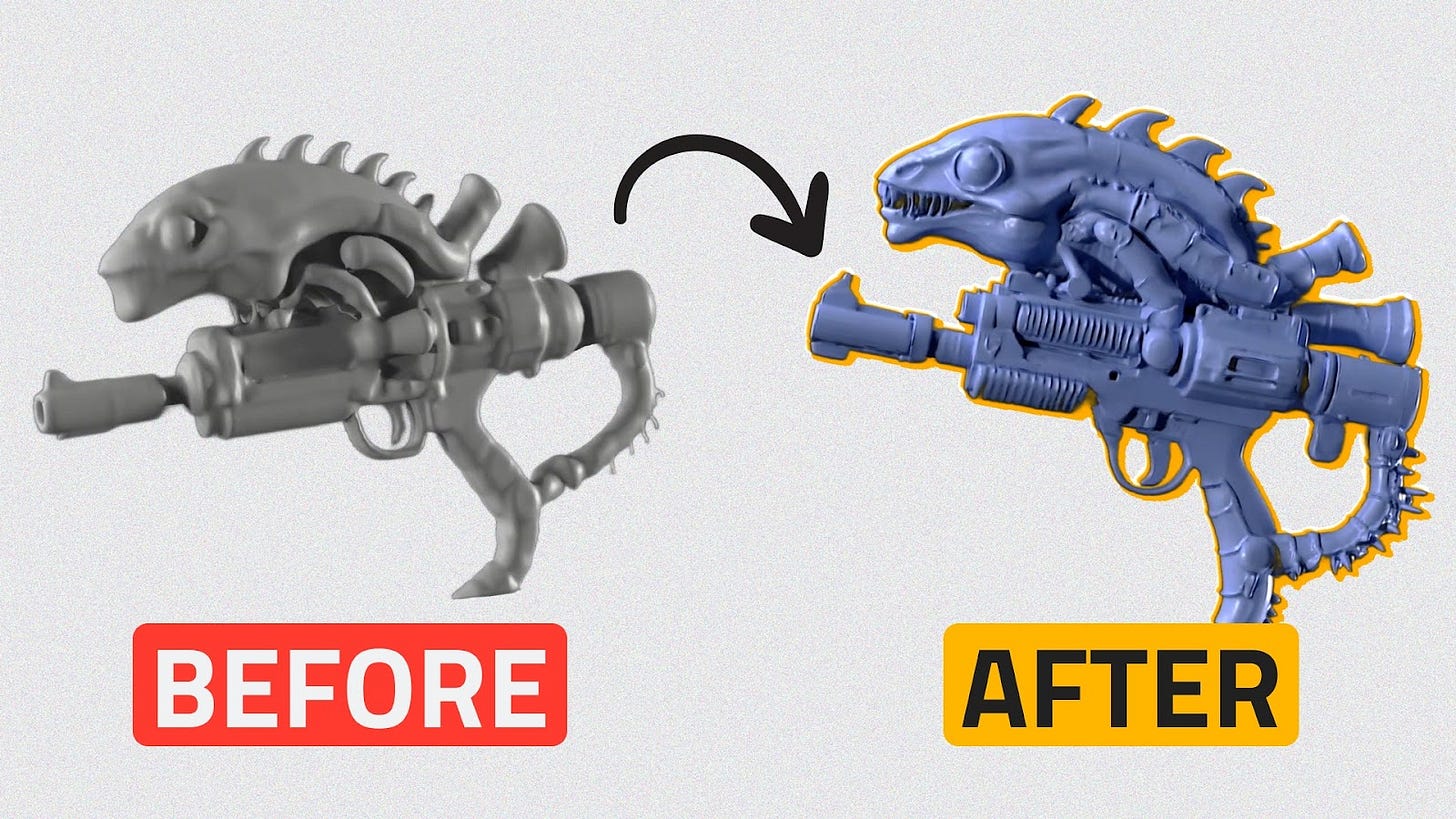

What are the biggest improvements in Meshy Five?

Ethan Hu: Meshy Five focuses on hard surface modeling, which was a major pain point. Previously, edges on objects like helmets or swords looked blurry, but now they’re sharp and clean. We’ve also improved the alignment between the input image and the generated 3D model, so users get what they want more consistently. Texture quality has also been significantly upgraded, with better alignment between textures and meshes.

You’ve introduced rigging and animation features. How are users leveraging these?

Ethan Hu: Users love the ability to preview animations directly in Meshy. They can rig a character, animate it, and see how it looks in motion before exporting it to Unity or Unreal Engine. Some users even capture specific poses for 3D printing or use them as references for 2D art. It’s a versatile feature that adds a lot of value, especially for game developers and animators.

What’s your take on Gaussian splatting? Is it complementary or competitive with mesh-based methods?

Ethan Hu: Gaussian splatting is fantastic for rendering environments efficiently, but it’s not ideal for extracting meshes. For the next 5-10 years, meshes will remain the dominant representation in 3D because they’re deeply integrated into current workflows. Gaussian splatting is more of a complementary technology, especially for environments, but it’s not replacing meshes anytime soon.

Where do you see the future of fully AI-generated games?

Ethan Hu: It’s an exciting direction, but we need to ask why we’re doing it. Are players going to enjoy AI-generated games, or is it just a gimmick? I think the more practical application is using AI to prototype games quickly. For example, a developer could type, “Create a platformer with a rabbit as the main character,” and get a playable prototype in minutes. That’s where I see the most potential.

What’s next for Meshy? What can we expect in the next year or two?

Ethan Hu: Our goal is to achieve production-ready quality. We’re about 70-80% there, and I believe we’ll reach Flux-level quality for 3D models within the next year. We’re also focusing on generating models optimized for mobile games, where low poly counts are crucial. Ultimately, we want to free artists from labor-intensive tasks so they can focus on creativity. The technology is evolving fast, and we’re excited to keep pushing the boundaries.

That’s it for today. Don’t forget to subscribe to the newsletter and share it with a friend if you found this interesting.

Check out the full video interview here

See you next week